IMX287 latency issue


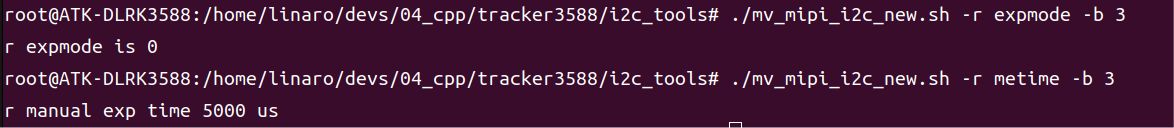

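I bought an MV-MIPI-IMX287M camera and connected it to an ALIENTEK (正点原子) RK3588 development board. Starting from the default configuration, the only change I made was switching to manual exposure with an exposure time of 5 ms.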

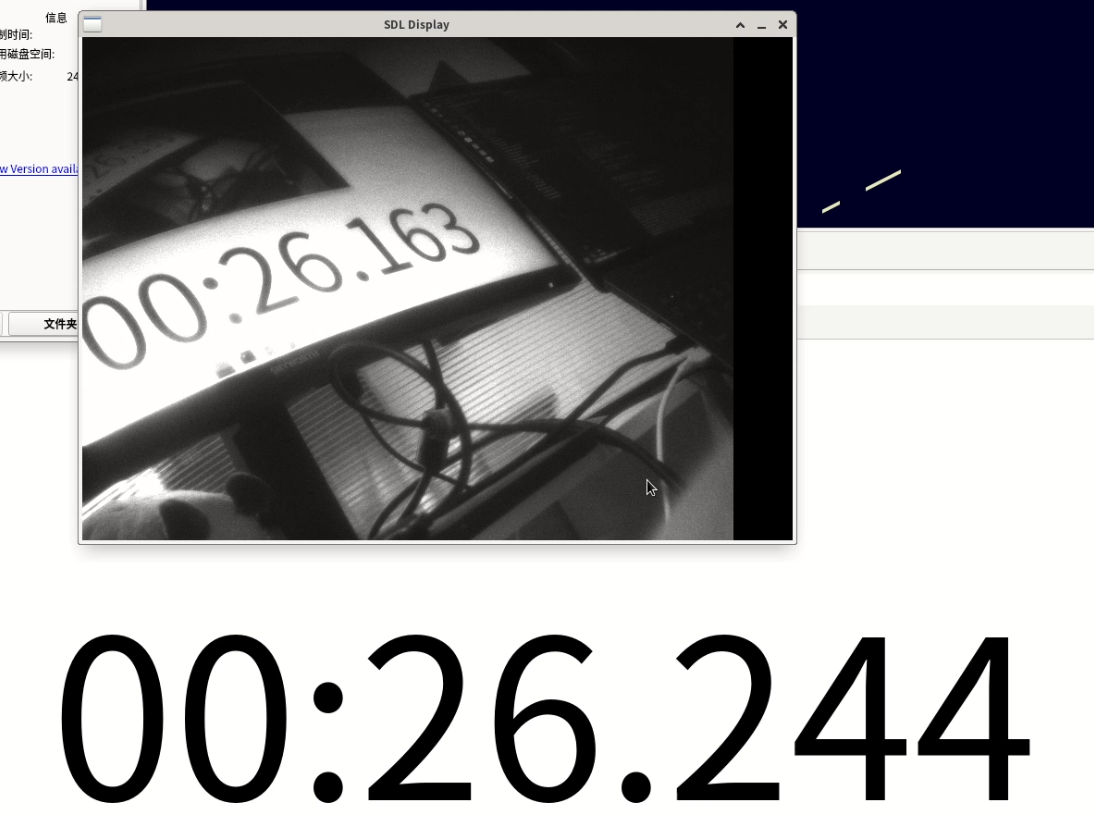

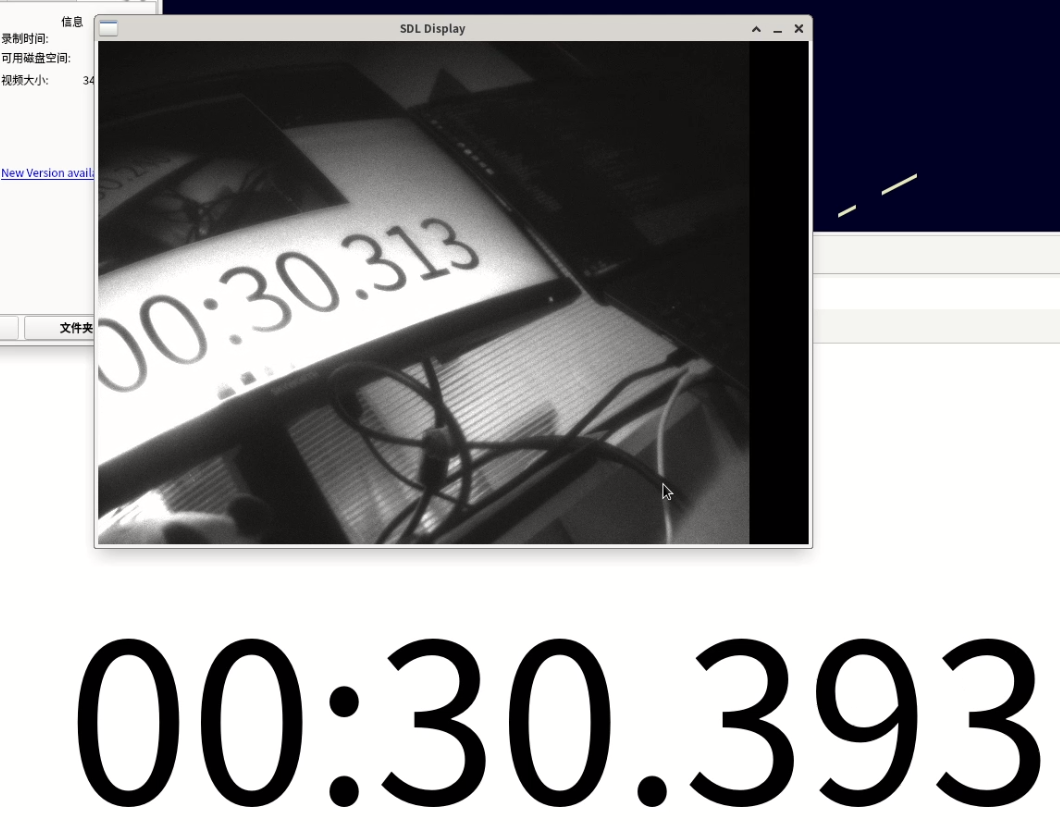

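I read the camera data through V4L2 and display the live image over HDMI with the SDL library. The measured capture-to-display latency is about 80 ms, as shown in the figure. This is higher than the USB 3.0 industrial camera we used before (same IMX287 sensor), whose capture-to-display latency was about 50-60 ms.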
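One way to split an end-to-end figure like this is to compare the timestamp the driver writes into each dequeued `v4l2_buffer` with the current time read right after `VIDIOC_DQBUF`: that gives the sensor-to-userspace part, and the rest is processing plus display. The sketch below is a minimal helper under stated assumptions: it assumes the driver reports `CLOCK_MONOTONIC` timestamps (`V4L2_BUF_FLAG_TIMESTAMP_MONOTONIC` set in `buf.flags`), and whether the stamp marks the start or the end of the frame depends on the driver (`V4L2_BUF_FLAG_TSTAMP_SRC_SOE` / `_EOF`). The name `buffer_age_ms` is hypothetical.

```cpp
#include <sys/time.h>
#include <time.h>

// Hypothetical helper: age of a captured frame in milliseconds.
// `captured` is v4l2_buffer::timestamp as filled in by VIDIOC_DQBUF.
// `now` is read with clock_gettime(CLOCK_MONOTONIC, ...) right after DQBUF.
// Assumes the driver stamps buffers with CLOCK_MONOTONIC.
inline double buffer_age_ms(const struct timeval &captured,
                            const struct timespec &now) {
    double captured_ms = captured.tv_sec * 1000.0 + captured.tv_usec / 1000.0;
    double now_ms = now.tv_sec * 1000.0 + now.tv_nsec / 1.0e6;
    return now_ms - captured_ms;
}

// Possible use inside get_image(), after a successful VIDIOC_DQBUF:
//   struct timespec now;
//   clock_gettime(CLOCK_MONOTONIC, &now);
//   if (buf.flags & V4L2_BUF_FLAG_TIMESTAMP_MONOTONIC)
//       fprintf(stderr, "frame age: %.2f ms\n", buffer_age_ms(buf.timestamp, now));
```

If this age stays small while the end-to-end number is around 80 ms, most of the delay is after `VIDIOC_DQBUF` (color conversion, SDL, HDMI output).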

Link to that USB 3.0 camera: http://www.cldkey.com/USB3camera/369.html

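Is the latency I measured reasonable? Is there a way to reduce it? We want to use the camera for high-precision target tracking, and we can sacrifice image quality and resolution.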
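A rough way to see why giving up resolution can help: under the simple model (an assumption, not a measurement) that every frame waiting in a queue between sensor and screen adds up to one frame period of 1000 / fps milliseconds, the queued delay shrinks in proportion to the frame rate. Lower resolution helps latency mainly if the smaller region lets the sensor and driver run at a higher frame rate. The function name and the frame rates in the comments are illustrative only.

```cpp
// Rough, illustrative model: worst-case delay added by frames waiting in queues.
// queued_frames: frames buffered between capture and display (assumed value).
// fps: sensor frame rate (assumed value).
inline double queued_delay_ms(int queued_frames, double fps) {
    double frame_period_ms = 1000.0 / fps;  // time between two frames
    return queued_frames * frame_period_ms;
}

// e.g. queued_delay_ms(3, 60.0)  gives about 50 ms
//      queued_delay_ms(3, 120.0) gives about 25 ms
```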

With the same sensor, shouldn't a MIPI camera have lower output latency than a USB 3.0 camera?

Here is my camera capture code:

```cpp
// v4l2_reader.h
#include <linux/videodev2.h>
#include <linux/v4l2-subdev.h>
#include <linux/media-bus-format.h>
#include <opencv2/opencv.hpp>
#include <fcntl.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>
#include <algorithm>
#include <cstdio>
#include <cstring>
#include <memory>

#define BUFFER_COUNT 1  // only one capture buffer is requested

struct buffer {
    void *start;
    size_t length;
};

// VideoReaderManager is my own base class (not shown).
class V4l2Reader : public VideoReaderManager {
public:
    V4l2Reader();
    ~V4l2Reader();
    cv::Mat get_image() override;

private:
    int video_fd = -1;
    int subdev_fd = -1;
    struct buffer buffers[BUFFER_COUNT];
    enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
};

// v4l2_reader.cpp
#include "v4l2_reader.h"

V4l2Reader::V4l2Reader() {
    // Set the sensor sub-device output format: 704x544, 8-bit mono.
    subdev_fd = open("/dev/v4l-subdev2", O_RDWR);
    if (subdev_fd < 0) { perror("Failed to open subdev"); return; }
    struct v4l2_subdev_format fmt = {
        .which = V4L2_SUBDEV_FORMAT_ACTIVE,
        .pad = 0,
        .format = {
            .width = 704,
            .height = 544,
            .code = MEDIA_BUS_FMT_Y8_1X8,
            .field = V4L2_FIELD_NONE,
            .colorspace = V4L2_COLORSPACE_DEFAULT,
        },
    };
    if (ioctl(subdev_fd, VIDIOC_SUBDEV_S_FMT, &fmt) < 0) { perror("Failed to set subdev format"); return; }

    video_fd = open("/dev/video0", O_RDWR);
    if (video_fd < 0) { perror("Failed to open video device"); return; }

    // Request buffers (the driver may adjust req.count).
    struct v4l2_requestbuffers req = {0};
    req.count = BUFFER_COUNT;
    req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
    req.memory = V4L2_MEMORY_MMAP;
    if (ioctl(video_fd, VIDIOC_REQBUFS, &req) < 0) { perror("Failed to request video buf"); return; }

    // Map each buffer into user space.
    for (int i = 0; i < BUFFER_COUNT; ++i) {
        struct v4l2_buffer buf = {0};
        struct v4l2_plane plane = {0};
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = i;
        buf.length = 1;  // one plane
        buf.m.planes = &plane;
        if (ioctl(video_fd, VIDIOC_QUERYBUF, &buf) < 0) { perror("Failed to query buf"); return; }
        buffers[i].length = plane.length;
        buffers[i].start = mmap(NULL, plane.length, PROT_READ | PROT_WRITE, MAP_SHARED,
                                video_fd, plane.m.mem_offset);
        if (buffers[i].start == MAP_FAILED) { perror("mmap"); return; }
    }

    // Queue every buffer so the driver can fill it.
    for (int i = 0; i < BUFFER_COUNT; ++i) {
        struct v4l2_buffer buf = {0};
        struct v4l2_plane plane = {0};
        buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
        buf.memory = V4L2_MEMORY_MMAP;
        buf.index = i;
        buf.length = 1;
        buf.m.planes = &plane;
        if (ioctl(video_fd, VIDIOC_QBUF, &buf) < 0) { perror("Failed to queue buf"); return; }
    }

    if (ioctl(video_fd, VIDIOC_STREAMON, &type) < 0) { perror("Failed to start streaming"); return; }
}

V4l2Reader::~V4l2Reader() {
    if (ioctl(video_fd, VIDIOC_STREAMOFF, &type) < 0) { perror("Failed to stop streaming"); }
    for (int i = 0; i < BUFFER_COUNT; ++i) {
        munmap(buffers[i].start, buffers[i].length);
    }
    close(subdev_fd);
    close(video_fd);
}

cv::Mat V4l2Reader::get_image() {
    // The frame is 704 pixels wide but 768 bytes per line are read; this assumes
    // the driver pads each line to 768 bytes (bytesperline, see VIDIOC_G_FMT).
    cv::Mat mat(544, 768, CV_8UC1);
    struct v4l2_buffer buf = {0};
    struct v4l2_plane plane = {0};
    buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
    buf.memory = V4L2_MEMORY_MMAP;
    buf.length = 1;
    buf.m.planes = &plane;
    // Blocks until the driver has filled a buffer.
    if (ioctl(video_fd, VIDIOC_DQBUF, &buf) < 0) { perror("DQBUF failed"); return mat; }
    // Copy the frame out, never reading past the end of the mapped buffer.
    size_t n = std::min<size_t>(768 * 544, buffers[buf.index].length);
    memcpy(mat.data, buffers[buf.index].start, n);
    cv::cvtColor(mat, mat, cv::COLOR_GRAY2BGR);
    // Give the buffer back to the driver.
    if (ioctl(video_fd, VIDIOC_QBUF, &buf) < 0) { perror("Failed to requeue buffer"); }
    return mat;
}

// main.cpp
#include "v4l2_reader.h"
// SdlImshow is my own SDL display wrapper (not shown).

int main() {
    std::shared_ptr<V4l2Reader> v4l2_reader = std::make_shared<V4l2Reader>();
    std::shared_ptr<SdlImshow> sdl_imshow = std::make_shared<SdlImshow>(768, 544);
    cv::Mat frame;
    while (1) {
        frame = v4l2_reader->get_image();
        sdl_imshow->imshow(frame);
    }
    return 0;
}
```
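The reader sets the sub-device to 704 pixels wide but copies 768 × 544 bytes, which is only correct if every line is padded to 768 bytes. A stride-aware copy keeps just the visible pixels and produces a tightly packed 704-wide image. This is a plain C++ sketch; the function name is hypothetical, and `bytesperline` is assumed to come from `VIDIOC_G_FMT` (`fmt.fmt.pix_mp.plane_fmt[0].bytesperline` for the multi-planar capture type used above).

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: copy a padded 8-bit frame into a tightly packed buffer.
// width/height: visible image size (e.g. 704 x 544).
// bytesperline: driver line stride, assumed >= width (query with VIDIOC_G_FMT).
std::vector<uint8_t> copy_packed(const uint8_t *src, size_t width, size_t height,
                                 size_t bytesperline) {
    std::vector<uint8_t> dst(width * height);
    for (size_t y = 0; y < height; ++y) {
        // Copy only the visible pixels of each line, skipping the padding.
        std::memcpy(dst.data() + y * width, src + y * bytesperline, width);
    }
    return dst;
}
```

If OpenCV is kept, `cv::Mat(544, 704, CV_8UC1, buffers[buf.index].start, bytesperline)` wraps the mapped buffer without any copy, but such a view is only valid until the buffer is queued back with `VIDIOC_QBUF`.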

@linoooooo Hello. The ISP pipeline inside our camera does not buffer a full frame, so the delay added by the camera itself is under a millisecond.

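I think your latency comes mainly from buffering in V4L2 capture and in the display path. Frankly, we don't know the SDL library well, so you will need to investigate how to optimize that part yourself.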
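On the V4L2 side, a common pattern for low latency is to request several buffers (so the driver always has somewhere to write while the application holds one) and, on each read, dequeue every buffer that is already filled, keep only the newest and requeue the rest. The sketch below is written against two hypothetical callbacks so it runs without a camera; in a reader like the one above they would wrap `VIDIOC_DQBUF` on a descriptor opened with `O_NONBLOCK` (which fails with `errno == EAGAIN` when no filled buffer is ready) and `VIDIOC_QBUF`. Waiting for the first frame, for example with `poll()`, is left out.

```cpp
#include <functional>

// Dequeue every filled buffer that is ready, requeue all but the newest,
// and return the newest buffer index (or -1 if nothing was ready).
// try_dequeue: returns a buffer index, or -1 when no filled buffer is ready
//              (e.g. non-blocking VIDIOC_DQBUF failing with EAGAIN).
// requeue:     gives a buffer back to the driver (e.g. VIDIOC_QBUF).
int dequeue_latest(const std::function<int()> &try_dequeue,
                   const std::function<void(int)> &requeue) {
    int latest = -1;
    for (int idx = try_dequeue(); idx >= 0; idx = try_dequeue()) {
        if (latest >= 0) {
            requeue(latest);  // older frame: hand it back unused
        }
        latest = idx;
    }
    return latest;
}
```

The caller still owns the returned buffer and must requeue it after copying or processing it; with a single buffer (`BUFFER_COUNT 1`) this pattern has nothing to skip, so it assumes a count of a few buffers.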

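If your program feeds the captured frames straight into your algorithm instead, the latency will certainly be much lower than this capture-to-display measurement suggests.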
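Following that suggestion, the tracking code can run in the capture loop on each fresh frame, while display runs separately and simply shows the most recent frame, so a slow screen path never holds up tracking. A single-slot "latest value" mailbox is one generic way to hand frames between the two; this is a C++17 sketch, not part of any camera SDK, and the class name is hypothetical.

```cpp
#include <condition_variable>
#include <mutex>
#include <optional>
#include <utility>

// Single-slot mailbox: the producer overwrites any unread value,
// so the consumer always gets the newest item and never a backlog.
template <typename T>
class LatestMailbox {
public:
    void put(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            slot_ = std::move(value);  // replaces a frame nobody has taken yet
        }
        cv_.notify_one();
    }

    // Blocks until a value is available, then removes it from the slot.
    T take() {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [this] { return slot_.has_value(); });
        T value = std::move(*slot_);
        slot_.reset();
        return value;
    }

    // Non-blocking variant: returns an empty optional if nothing is waiting.
    std::optional<T> try_take() {
        std::lock_guard<std::mutex> lock(mutex_);
        std::optional<T> value = std::move(slot_);
        slot_.reset();
        return value;
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::optional<T> slot_;
};
```

A possible split, assuming a hypothetical `track()` function: a worker thread loops on `frame = v4l2_reader->get_image(); track(frame); box.put(frame);`, while the main thread, which owns the SDL window, loops on `sdl_imshow->imshow(box.take());`. Frames the display cannot keep up with are dropped instead of queued.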